Multicore system

• CPU with more than one core

• Cores operate as separate processors within a single chip

• Increases performance without raising the processor clock speed

Multiprocessor system

• Has more than one CPU

• Some machines combine the two technologies, multicore and multiprocessor

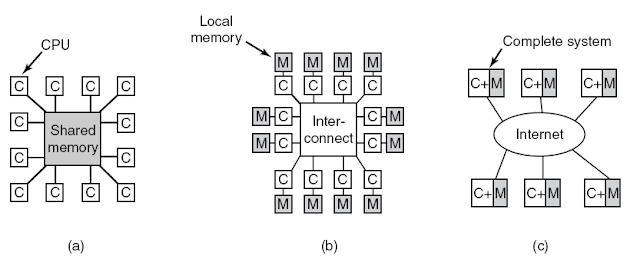

(a) A shared-memory multiprocessor. (b) A message-passing multicomputer. (c) A wide area distributed system.

• Multiprocessor systems are classified according to the manner of associating CPUs and memory units

– Uniform memory access (UMA) architecture

• Previously called tightly coupled multiprocessor

• Also called symmetrical multiprocessor (SMP)

• Examples: Balance system and VAX 8800

– Nonuniform memory access (NUMA) architecture

• Examples: HP AlphaServer and IBM NUMA-Q

– No-remote-memory-access (NORMA) architecture

• Example: Hypercube system by Intel

• Is actually a distributed system (discussed later)

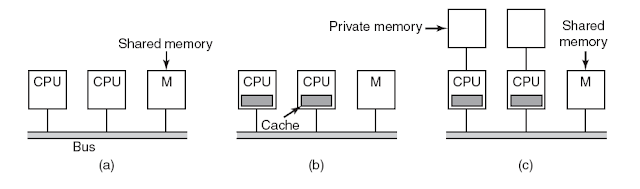

Three bus-based multiprocessors. (a) Without caching. (b) With caching. (c) With caching and private memories.

• Uniform Memory Access (UMA)

– also known as Symmetric MultiProcessors (SMP)

• Non-Uniform Memory Access (NUMA)

• SIMILARITIES

– single memory space

– pitfall: comparison between shared memory and distributed memory

• DIFFERENCES

– access time

– # of processors

– bus vs. network implementation

• SISD (Single Instruction Single Data)

– Uniprocessors

• MISD (Multiple Instruction Single Data)

– Stream-based processing

• SIMD (Single Instruction Multiple Data = DLP)

– Examples: Illiac-IV, CM-2 (Thinking Machines), Xetal (Philips), Imagine (Stanford), vector machines, Cell architecture (Sony)

– Simple programming model

– Low overhead

• MIMD (Multiple Instruction Multiple Data)

– Examples: Sun Enterprise 5000, Cray T3D, SGI Origin, multi-core Pentiums, and many more

• Figure 8-10. The TSL instruction can fail if the bus cannot be locked. These four steps show a sequence of events where the failure is demonstrated.

• Figure 8-11. Use of multiple locks to avoid cache thrashing.

• Figure 8-12. Using a single data structure for scheduling a multiprocessor.

The three parts of gang scheduling:

- Groups of related threads are scheduled as a unit, a gang.

- All members of a gang run simultaneously, on different timeshared CPUs.

- All gang members start and end their time slices together.
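The three rules above can be illustrated with a small sketch. It builds a table with one row per time slice and one column per CPU, and places every thread of a gang in the same row. The function name `gang_schedule`, the first-fit packing of several small gangs into one slice, and the thread labels are assumptions made for illustration only; they are not taken from the figures in these notes.

```python
from typing import Dict, List, Optional


def gang_schedule(gangs: Dict[str, int], num_cpus: int) -> List[List[Optional[str]]]:
    """Return a schedule table: one row per time slice, one column per CPU.

    gangs maps a gang name to its number of threads. All threads of a gang
    are placed in the same row, so they start and end their slice together.
    Unused CPUs in a slice are marked None.
    """
    rows: List[List[str]] = []
    for name, n_threads in gangs.items():
        if n_threads > num_cpus:
            raise ValueError(f"gang {name} needs more CPUs than available")
        threads = [f"{name}{i}" for i in range(n_threads)]
        # First fit (an assumption): reuse an earlier slice if it has room.
        for row in rows:
            if len(row) + n_threads <= num_cpus:
                row.extend(threads)
                break
        else:
            rows.append(threads)
    # Pad every slice so each row has exactly num_cpus columns.
    return [row + [None] * (num_cpus - len(row)) for row in rows]


if __name__ == "__main__":
    for slot, row in enumerate(gang_schedule({"A": 6, "B": 4, "C": 2}, 6)):
        print(slot, row)
```

Because a gang never spans two rows, threads that communicate with each other are always running in the same time slice.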

• Figure 8-14. Communication between two threads belonging to process A that are running out of phase.

Characteristics of Embedded Systems

• Real-time operation:

– In many embedded systems, the correctness of a computation depends, in part, on the time at which it is delivered. Often, real-time constraints are dictated by external I/O and control stability requirements.

• Reactive operation:

– Embedded software may execute in response to external events. If these events do not occur periodically or at predictable intervals, the embedded software may need to take into account worst-case conditions and set priorities for execution of routines.

• Configurability:

– Because of the large variety of embedded systems, there is a large variation in the requirements, both qualitative and quantitative, for embedded OS functionality.

• I/O device flexibility:

– There is virtually no device that needs to be supported by all versions of the OS, and the range of I/O devices is large.

• Streamlined protection mechanisms:

– Embedded systems are typically designed for a limited, well-defined functionality.

• Direct use of interrupts:

– General-purpose operating systems typically do not permit any user process to use interrupts directly.

Purpose Built Embedded Operating System

• A significant number of operating systems have been designed from the ground up for embedded applications. Two prominent examples of this approach are eCos and TinyOS.

Specialized Embedded System

• Typical characteristics include:

– A fast and lightweight process or thread switch

– A real-time scheduling policy, with the dispatcher module part of the scheduler instead of a separate component

– A small size

– Quick response to external interrupts; a typical requirement is a response time of less than 10 μs

– Minimal intervals during which interrupts are disabled

– Fixed or variable-sized partitions for memory management, as well as the ability to lock code and data in memory

– Special sequential files that can accumulate data at a fast rate

• To deal with timing constraints, the kernel:

– Provides bounded execution time for most primitives

– Maintains a real-time clock

eCos

• eCos – Embedded Configurable Operating System

• Open source, royalty-free, real-time OS for embedded applications

Configurability

• The eCos configuration tool, which runs on Windows or Linux, is used to configure an eCos package to run on a target embedded system

• The eCos package is structured hierarchically

eCos Components

• HAL – Hardware Abstraction Layer

– HAL is software that presents a consistent API to the upper layers and maps upper-layer operations onto a specific hardware platform

• eCos Kernel

– Designed to meet the following objectives:

• Low interrupt latency: The time it takes to respond to an interrupt and begin executing an ISR.

• Low task switching latency: The time it takes from when a thread becomes available to when actual execution begins.

• Small memory footprint: Memory resources for both program and data are kept to a minimum by allowing all components to configure memory as needed.

• Deterministic behavior: Throughout all aspects of execution, the kernel's performance must be predictable and bounded to meet real-time application requirements.

eCos Scheduler

• Bitmap Scheduler

– A bitmap scheduler supports multiple priority levels, but only one thread can exist at each priority level at any given time.

• Multilevel Queue Scheduler

– Supports up to 32 priority levels

– Allows for multiple active threads at each priority level, limited only by system resources
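The difference between the two schedulers can be sketched in a few lines. This is a simplified model, not the eCos implementation: the class names are hypothetical, priority 0 is assumed to be the highest priority, the bitmap scheduler is assumed to use 32 levels like the multilevel queue scheduler, and round-robin rotation within a level is an assumption about how equal-priority threads share the CPU.

```python
from collections import deque
from typing import List, Optional


class BitmapScheduler:
    """At most one thread per priority level; one bit per level marks it ready."""

    LEVELS = 32  # assumed level count

    def __init__(self) -> None:
        self.ready = 0  # bit i set means the thread at priority i is ready
        self.threads: List[Optional[str]] = [None] * self.LEVELS

    def add(self, priority: int, name: str) -> None:
        if not 0 <= priority < self.LEVELS:
            raise ValueError("priority out of range")
        if self.threads[priority] is not None:
            raise ValueError("priority level already occupied")
        self.threads[priority] = name
        self.ready |= 1 << priority

    def remove(self, priority: int) -> None:
        self.threads[priority] = None
        self.ready &= ~(1 << priority)

    def pick(self) -> Optional[str]:
        if self.ready == 0:
            return None
        # Isolate the lowest set bit; its index is the best (lowest) priority number.
        lowest = (self.ready & -self.ready).bit_length() - 1
        return self.threads[lowest]


class MultilevelQueueScheduler:
    """Several threads may share a level; each level keeps a FIFO queue."""

    def __init__(self, levels: int = 32) -> None:
        self.queues = [deque() for _ in range(levels)]

    def add(self, priority: int, name: str) -> None:
        self.queues[priority].append(name)

    def pick(self) -> Optional[str]:
        for queue in self.queues:  # scan from priority 0 downward
            if queue:
                name = queue.popleft()
                queue.append(name)  # rotate so peers at this level take turns
                return name
        return None
```

The bitmap version finds the next thread with a single bit operation, which keeps selection time constant, while the multilevel queue version trades a little bookkeeping for the ability to hold many threads at one priority.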

Client Server Computing

• A client/server environment is populated by clients and servers

• Each server in the client/server environment provides a set of shared services to the clients

• Client/server computing is typically distributed computing

Client Server Environment

Client Server Architecture

Three tiered Client Server Architecture

Middleware

• Middleware provides a layer of software that enables uniform access to different systems

Service Oriented Architecture

• A form of client/server architecture

• Organizes business functions into a modular structure

• Consists of a set of services and a set of client applications that use the services

SOA Architectural components

• Three types

– Service Provider

• A network node that provides a service interface for a software asset that manages a specific set of tasks

– Service Requestor

• A network node that discovers and invokes other software services to provide a business solution

– Service Broker

• A specific kind of service that acts as a registry and allows for the lookup of service provider interfaces and service locations
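The interaction among the three roles can be sketched as an in-process registry. This is only an illustration: the names `ServiceBroker`, `ServiceEntry`, `quote_total`, the `"tax"` service, and the `"node-1"` location are all hypothetical, and a real SOA would perform the lookup and the invocation over a network rather than through direct function calls.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class ServiceEntry:
    location: str                  # where the provider lives (hypothetical label)
    handler: Callable[[int], int]  # the service interface offered by the provider


class ServiceBroker:
    """Registry that maps a service name to its provider interface and location."""

    def __init__(self) -> None:
        self._registry: Dict[str, ServiceEntry] = {}

    def register(self, name: str, location: str, handler: Callable[[int], int]) -> None:
        self._registry[name] = ServiceEntry(location, handler)

    def lookup(self, name: str) -> ServiceEntry:
        if name not in self._registry:
            raise KeyError(f"no provider registered for {name!r}")
        return self._registry[name]


def tax_service(amount_cents: int) -> int:
    """Service provider: returns a flat 10% tax, in whole cents."""
    return amount_cents // 10


def quote_total(broker: ServiceBroker, amount_cents: int) -> int:
    """Service requestor: discovers the tax service, then invokes it."""
    entry = broker.lookup("tax")
    return amount_cents + entry.handler(amount_cents)


if __name__ == "__main__":
    broker = ServiceBroker()
    broker.register("tax", "node-1", tax_service)
    print(quote_total(broker, 1000))
```

The requestor depends only on the service name, so a provider can move to another node or be replaced without changing client code.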

Clustering

• An approach to providing high performance and high availability

• A cluster is a group of interconnected, whole computers working together as a unified computing resource (acting as one machine)

Benefits of clustering

• Absolute scalability: It is possible to create large clusters that far surpass the power of even the largest stand-alone machines. A cluster can have dozens or even hundreds of machines, each of which is a multiprocessor.

• Incremental scalability: A cluster is configured in such a way that it is possible to add new systems to the cluster in small increments. Thus, a user can start out with a modest system and expand it as needs grow, without having to go through a major upgrade in which an existing small system is replaced with a larger system.

• High availability: Because each node in a cluster is a stand-alone computer, the failure of one node does not mean loss of service. In many products, fault tolerance is handled automatically in software.

• Superior price/performance: By using commodity building blocks, it is possible to put together a cluster with equal or greater computing power than a single large machine, at much lower cost.

Two nodes cluster

Shared Disk Cluster

Cluster Method – benefits and limitations

Design Issues

• Failure Management

• Load Balancing

• Parallelizing Computation
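Two of these design issues, failure management and load balancing, can be illustrated together with a toy dispatcher. The sketch below assumes a simple round-robin policy and a manually maintained set of healthy nodes; the class name `Cluster` and the node names are hypothetical, and real cluster software would detect failures itself (for example with heartbeats) rather than being told about them.

```python
from typing import Iterable, List, Set


class Cluster:
    """Round-robin dispatcher that skips nodes marked as failed."""

    def __init__(self, nodes: Iterable[str]) -> None:
        self.nodes: List[str] = list(nodes)
        self.up: Set[str] = set(self.nodes)  # nodes currently able to serve
        self._next = 0                       # index of the next node to try

    def fail(self, node: str) -> None:
        self.up.discard(node)

    def recover(self, node: str) -> None:
        if node in self.nodes:
            self.up.add(node)

    def dispatch(self) -> str:
        """Return the node that should handle the next request."""
        # Try each node at most once, starting from the round-robin position.
        for _ in range(len(self.nodes)):
            node = self.nodes[self._next]
            self._next = (self._next + 1) % len(self.nodes)
            if node in self.up:
                return node
        raise RuntimeError("no node available")
```

When a node fails, its share of requests is spread over the remaining nodes, so service continues at reduced capacity instead of stopping.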

Cluster Computer Architecture
